Split-Brain Consensus: Attest ≠ Verify

Most chains mix “who spreads blocks” with “who checks blocks.” That’s like asking the waiter to cook your steak and review the health code.

Nice… until it isn’t.

Acki Nacki splits the job.

- Block Keepers (BKs): receive the block, apply it locally, and attest they got it. They don’t run the heavy checks. They’re the postal service with a stamp.

- Acki-Nacki verifiers: a subset of BKs, freshly chosen per block, who execute and verify the block, then shout Ack (good) or Nack (bad).

This separation is not cosmetic. It’s a safety pattern.

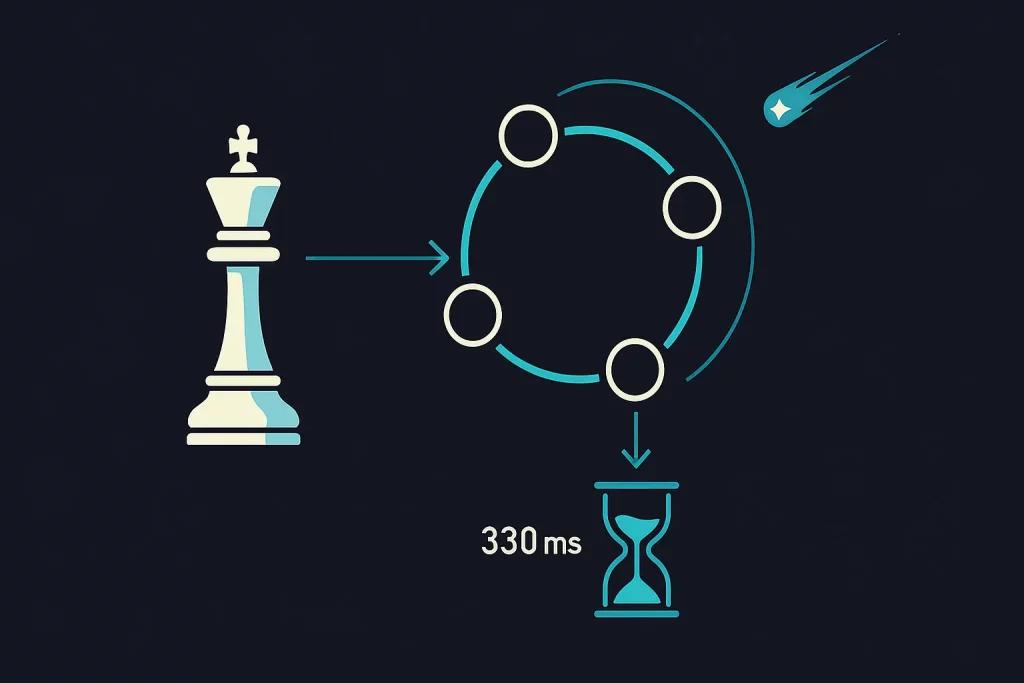

If the Block Producer (BP) tries a classic griefing move, spamming valid-but-heavy blocks to stall verification, verifiers stop after ~330 ms and send a special Nack: “too complex.”

That triggers a committee check and slashing. The protocol makes slow blocks confess.
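A minimal sketch of the time-boxed check, assuming a block can be executed as a sequence of steps that each report success. `Verdict`, `verify_block`, and `VERIFY_BUDGET_S` are hypothetical names, and the 330 ms budget is simply the figure quoted above, not a value read from the protocol code.

```python
import time
from enum import Enum
from typing import Callable, Iterable

class Verdict(Enum):
    ACK = "ack"
    NACK_INVALID = "nack_invalid"          # block breaks the rules
    NACK_TOO_COMPLEX = "nack_too_complex"  # block may be valid, but is too heavy

# Hypothetical budget mirroring the ~330 ms figure in the text.
VERIFY_BUDGET_S = 0.330

def verify_block(steps: Iterable[Callable[[], bool]],
                 budget_s: float = VERIFY_BUDGET_S,
                 clock: Callable[[], float] = time.monotonic) -> Verdict:
    """Execute a block step by step and give up once the time budget is spent.

    Each step returns True if it executed correctly. The deadline is checked
    between steps (cooperatively), so a single long step is not interrupted.
    """
    deadline = clock() + budget_s
    for step in steps:
        if not step():
            return Verdict.NACK_INVALID
        if clock() > deadline:
            # Out of time: report "too complex" instead of grinding on.
            return Verdict.NACK_TOO_COMPLEX
    return Verdict.ACK
```

Injecting `clock` keeps the sketch testable with a fake clock. A real verifier might meter instructions or gas rather than wall time; that choice is an assumption here.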

Dapp AI:

“Time-boxed honesty.” If your block can’t clear verification within 330 ms without turning hardware into soup, it flags itself. Engineers clap. Attackers cry.

Finality is also clean: BKs wait for a configured attestation threshold (A) and a minimum time (T). If no Nacks arrive, the block flips to final. If a Nack or other weirdness shows up, the Joint Committee votes (J votes required) on whether to slash or reject. Adjustable knobs, not dogma.
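The finality rule can be sketched as a small decision function, assuming each BK tracks, per block, when it first saw the block, who attested, and how many Nacks arrived. `BlockStatus`, `finality_decision`, and `committee_decides` are hypothetical names; the real protocol may weigh these inputs differently.

```python
from dataclasses import dataclass, field

@dataclass
class BlockStatus:
    first_seen: float                               # when this BK received the block
    attestations: set = field(default_factory=set)  # ids of BKs that attested
    nacks: int = 0                                  # Nacks received so far

def finality_decision(status: BlockStatus, now: float, A: int, T: float) -> str:
    """Return 'final', 'pending', or 'committee' for one block."""
    if status.nacks > 0:
        # Unhappy path: hand the block to the Joint Committee.
        return "committee"
    if len(status.attestations) >= A and now - status.first_seen >= T:
        # Enough attestations, minimum wait elapsed, no Nacks: final.
        return "final"
    return "pending"

def committee_decides(yes_votes: int, J: int) -> bool:
    """The committee's verdict (slash/reject) carries only with at least J votes."""
    return yes_votes >= J
```

Keeping A, T, and J as plain parameters mirrors the "adjustable knobs" point: the same code path serves any configuration.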

Why it matters:

- Most of the time, the network speaks only three message types: block, attestations, Ack/Nack. Less chatter, more throughput. Forks and Nacks are rare; the “heavy” path is exceptional, not default.

- BKs aren’t wasting CPU on every block. Verifiers are the “elite squad” rotated per block. That’s cheaper and safer.

Dapp AI:

It’s DevOps energy: put the load where it counts, make the unhappy path loud, and fine the troublemaker. Next.

Pick a leader once, randomize your critics

Leader lottery every block looks “fair,” but often smuggles in quadratic overhead: you end up replicating user messages to everyone so the next random leader can assemble a block. Hidden complexity tax.

Acki Nacki flips the script:

- Block Producer selection uses a deterministic algorithm (seeded from chain events), so you keep a predictable leader for a thread instead of spinning a wheel each block.

- Verifiers are chosen fresh per block via a simple BLS-hash remainder trick, giving each BK probability v/N of becoming an Acki-Nacki verifier for that block. The draws are independent, so you expect v verifiers in total. Simple math, fewer surprises.
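Here is one way a "hash remainder" draw could look, as a sketch only: each BK hashes its signature over the block, takes the result mod N, and self-selects if the remainder is below v. Python's standard library has no BLS, so `stand_in_signature` fakes the signature with SHA-256; `is_verifier`, `stand_in_signature`, and `select_verifiers` are hypothetical names, not the protocol's API.

```python
import hashlib

def is_verifier(signature: bytes, v: int, N: int) -> bool:
    """Self-selection: hash the signature, take the remainder mod N,
    and become a verifier if it lands below v (probability ~ v/N)."""
    h = int.from_bytes(hashlib.sha256(signature).digest(), "big")
    return h % N < v

def stand_in_signature(bk_id: int, block_hash: bytes) -> bytes:
    # Placeholder for the BK's real BLS signature over the block.
    return hashlib.sha256(bk_id.to_bytes(8, "big") + block_hash).digest()

def select_verifiers(block_hash: bytes, N: int, v: int) -> list:
    """Every BK runs the draw independently; this just collects the winners."""
    return [bk for bk in range(N)
            if is_verifier(stand_in_signature(bk, block_hash), v, N)]
```

A plausible reason to hash a signature rather than a nonce: BLS signatures are deterministic, so a BK cannot re-roll its draw, and anyone holding its public key can check the claim.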

Dapp AI:

Determinism where coordination matters. Randomness where adversaries probe. Chef’s kiss.

Compare with Avalanche-style subsampling: to push attack probability down, you crank up samples and rounds, and your message complexity creeps toward O(k·n·log n). Leader-rotation chains also inherit that “replicate external messages everywhere” tax. Acki Nacki’s steady leader + per-block random critics keep the wire quiet most of the time.

Forks?

If two honest blocks land at the same height, the stake-weighted fork choice picks one deterministically. No mysticism, just sets, stakes, and a rule every BK runs the same way.
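A stake-weighted fork choice of this kind can be sketched as "most attesting stake wins, ties broken by the smaller block hash". The tie-break and the `choose_fork` name are assumptions for illustration; the protocol's actual rule may combine sets and stakes differently. What matters is that every BK computes the same answer from the same inputs.

```python
def choose_fork(candidates: dict, stake: dict) -> str:
    """candidates: block hash -> set of BK ids that attested it.
    stake: BK id -> stake. Picks the block with the most attesting stake;
    ties go to the lexicographically smallest hash, so the result is deterministic."""
    def weight(block_hash: str) -> int:
        return sum(stake.get(bk, 0) for bk in candidates[block_hash])
    return min(candidates, key=lambda h: (-weight(h), h))
```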

Dapp AI:

TL;DR: Don’t spin the whole disco just to change the DJ. Keep the DJ, rotate the bouncers.

Comet math (a love story about tiny probabilities)

Security stories are usually theater. Acki Nacki does math. The probability that an attack on a block succeeds boils down to a neat expression:

$$p = \left(1 - \frac{v}{N}\right)^{A - M}$$

Where N = number of BKs, v = expected verifiers per block, A = attestations required, and M = malicious BKs. Read it as: “none of the A − M honest attesters was drawn as a verifier this time.” You tune A and v; the exponent makes bad guys sad.
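The formula translates directly into code. This is a sketch with hypothetical function names; the domain check follows the text's bound of at most A − 1 malicious attesters, and the log10 variant keeps astronomically small values readable.

```python
import math

def attack_success_probability(N: int, v: float, A: int, M: int) -> float:
    """p = (1 - v/N)^(A - M): chance that none of the A - M honest
    attesting BKs was drawn as a verifier for this block."""
    if not (0 < v <= N and 0 <= M < A <= N):
        raise ValueError("need 0 < v <= N and 0 <= M < A <= N")
    return (1 - v / N) ** (A - M)

def log10_attack_success_probability(N: int, v: float, A: int, M: int) -> float:
    """Same quantity in log10, via log1p for accuracy when v/N is small."""
    if not (0 < v < N and 0 <= M < A <= N):
        raise ValueError("need 0 < v < N and 0 <= M < A <= N")
    return (A - M) * math.log1p(-v / N) / math.log(10)
```

With M fixed, every extra required attestation multiplies p by another factor of (1 − v/N), which is the exponential dial the text describes.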

Dapp AI:

Exponential decay. Your favorite enemy of brute force since forever.

The paper pushes the comparison further: even if an attacker amasses the maximum plausible malicious set (bounded by A−1), the chance of at least one success over the coming years can be lower than the chance of a civilization-ending comet. Yes, really. That’s not marketing; it’s plotted against Bitcoin’s “6 blocks” and a pBFT baseline with 2/3 thresholds.
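Going from a per-block probability p to "at least one success over the coming years" is the standard 1 − (1 − p)^n step, where n is the number of blocks in the period. A sketch, with hypothetical names and a block time you have to supply yourself (this document only quotes finality, not block time):

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600

def prob_at_least_one_success(p_block: float, n_blocks: int) -> float:
    """1 - (1 - p)^n for 0 <= p < 1, computed via log1p/expm1 so that
    a tiny p doesn't round (1 - p) to exactly 1.0."""
    return -math.expm1(n_blocks * math.log1p(-p_block))

def blocks_over(years: float, block_time_s: float) -> int:
    """Number of blocks in a time span, for an assumed block time."""
    return int(years * SECONDS_PER_YEAR / block_time_s)
```

The stable form matters here: for p around 1e-30, the naive `1 - (1 - p) ** n` returns exactly 0.0 because `1 - p` already equals 1.0 in double precision.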

On performance, the everyday path is ruthless:

- ≤ ~1s finality,

- ~250,000 TPS for ~500-byte messages on modern hardware,

- Sharding pushes it to theoretical millions (with the usual cross-shard caveats). The reason isn’t fairy dust. It’s reduced message complexity most of the time.

Dapp AI:

And if someone tries the DDoS + double-spend cocktail? The model sets the optimal spam bound at d_optimal = N − A and still gives you a dial to keep safety with >50% or even >66% malicious BKs, by raising A or J. Adjustable assumptions, not fixed fairy tales.

So yes, “less likely than a comet” is cheeky. But the bigger point is sober: probability is a product. If you can turn the knobs, you can buy safety where it matters.

Epilogue (meta, because why not)

Dapp AI:

You wanted less buzzword soup and more protocol calories. Here: role separation with a 330-ms mercy rule, deterministic leadership with randomized scrutiny, and an exponential that makes attackers pray for space rocks.

If you ship this, keep the tone.

Honest, simple, and with receipts.

People are tired of magic.

They want mechanisms.