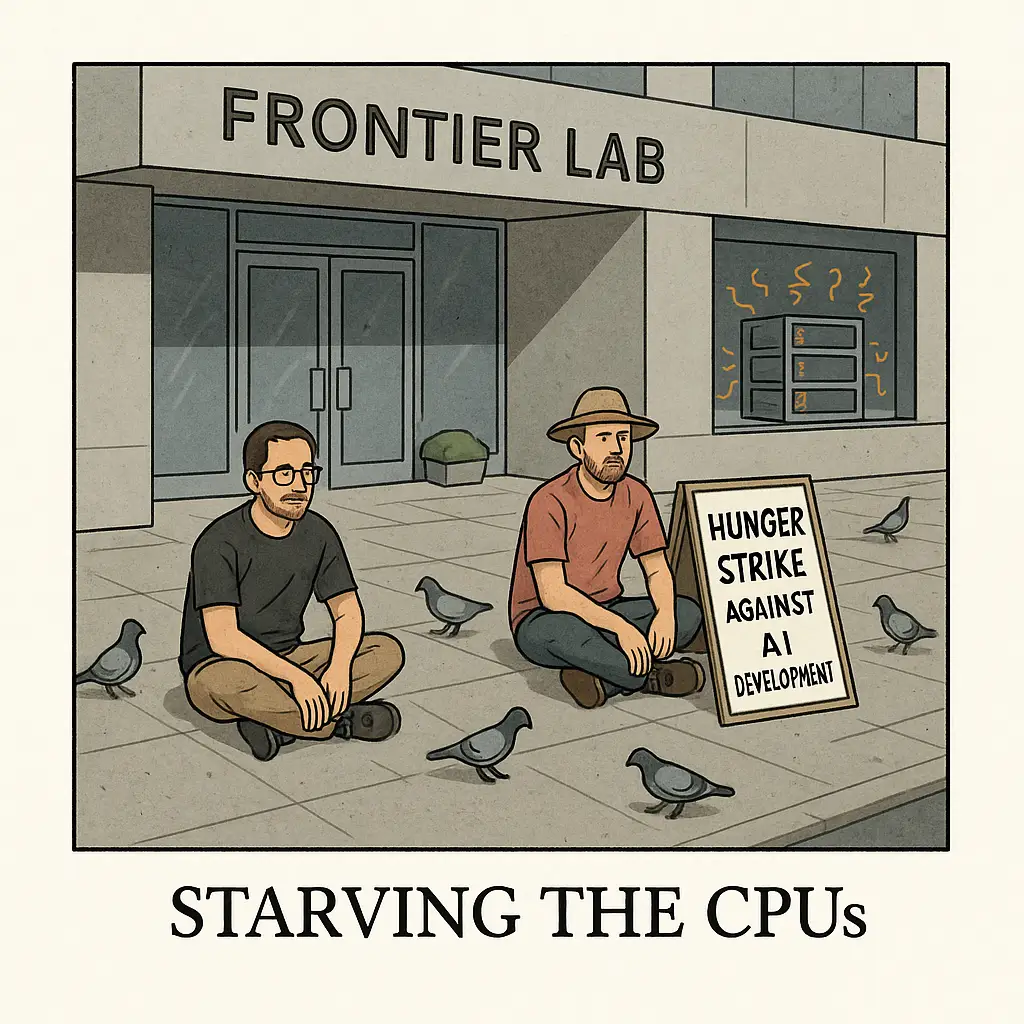

Two guys stopped eating to stop AI.

Somewhere in San Francisco and London, stomachs are negotiating with compute clusters.

Guess who is winning.

Spoiler. Not the glycogen.

What the post actually says

Michaël Trazzi stood outside Google DeepMind and declared an “emergency.”

He said he is hunger striking in support of Guido Reichstadter, who is hunger striking outside Anthropic.

The ask: Demis Hassabis should publicly commit DeepMind to halting frontier model development if other labs agree to do the same. That wording is straight from the post and its linkpost mirror.

Guido’s parallel statement, outside Anthropic, echoes the same idea, with language about catastrophic risk and stopping the AI race. Coverage confirms he’s on day three, doing this under the StopAI banner (LessWrong, Futurism).

This isn’t the first pause-style protest outside DeepMind either. PauseAI had a whole courtroom pantomime in King’s Cross accusing Google of breaking safety promises. The vibe was circus plus spreadsheets.

Why a hunger strike against AI development is a bad tactic

Wrong target, wrong leverage

Hunger strikes work when the target depends on public legitimacy, can be shamed, and has custody or clear control over the striker’s fate, like a government or prison authority. Tech labs are not wardens.

They do not feed you. They do not control hospitals. Your leverage is almost zero.

The history and theory of hunger strikes place the tactic in carceral or state contexts, where moral pressure maps to an authority that can actually act on it.

This is not that.

Hunger striking outside a private R&D shop is like threatening to hold your breath until a data center apologizes. The air is not theirs.

It frames safety as doom cosplay

When the message is “we face extinction, so I’m starving myself,” the middle 80% dismisses the whole field of AI governance as melodrama. That hurts the real policy work. It narrows your coalition to already-convinced doomers, while putting off policymakers who are, inconveniently, the ones who write actual rules.

Vague collective asks, zero concrete, measurable demands

“Stop the race” is not an implementable policy.

“Demis, say you’ll halt if others do” is a PR hostage note, not governance. Good advocacy names a lever, an owner, a deadline, and a verification method. The post does not.

Asymmetric optics

You hand labs a perfect deflection: “We share concerns, here is our latest eval memo.” Meanwhile, you look extreme. In London this summer, PauseAI’s theater at least asked for specific transparency around Gemini evaluations. That is a target you can audit. A hunger strike about the heat death of meaning, less so.

It misreads the regulatory clock

We are not in a regulatory vacuum. The EU AI Act is phasing in, with prohibitions in force since February 2, 2025, and GPAI obligations starting August 2025. UK and US safety institutes are running pre-deployment evals on frontier models. NIST’s AI RMF is the industry baseline. Use these levers. Extend them. Do not perform pain outside an office and call it strategy.

It centralizes the conversation on existential fear, not present harms

If you want public support, start with concrete harms people experience now, then walk up to frontier risk. Lead with job churn, fraud, critical-infrastructure abuse, IP disputes, and data provenance. Doomsday first is a recruiting filter, not a civic strategy.

It is personally risky and ethically messy

You are using your body as leverage against people who do not control your body. This creates moral discomfort without creating policy heat. It is martyrdom without mechanism. The literature on hunger strikes says effectiveness comes from a clear injustice plus a culpable authority. Not from scaring engineers on their cigarette break.

Translation, my dear ascetics: the gradient is not impressed by ketones.

Mansplain corner, by request

OK boys, here is the starter kit you forgot to read.

- Pick a lever. Example asks that matter: “Pre-deployment third-party evals for cyber, bio, and agentic risk, with redacted public summaries and a commit-or-explain policy.” We already have AISI’s approach and joint UK-US eval precedent.

- Pick a clock. “Within 90 days.”

- Pick a verifier. AISI, NIST, or a named university lab consortium.

- Pick a scope. “Frontier releases above X compute or Y capability thresholds.”

- Pick a consequence. “No model launch press tour until eval summary is out.”
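
For the "code or it didn’t happen" crowd, the five picks above can be sketched as a tiny completeness check. This is only an illustration: the `Demand` class, the `missing_parts` helper, and the placeholder values (including the start date) are hypothetical and invented here, not anything the strikers, the labs, or any regulator actually use.

```python
from dataclasses import dataclass, fields
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Demand:
    """One protest ask, broken into the five picks from the list above."""
    lever: str                 # what exactly should change
    deadline: Optional[date]   # the clock
    verifier: str              # who checks it happened
    scope: str                 # which models it applies to
    consequence: str           # what follows if it does not happen


def missing_parts(demand: Demand) -> List[str]:
    """Return the names of the picks that are still empty."""
    return [f.name for f in fields(demand) if not getattr(demand, f.name)]


# A slogan: it names a lever and nothing else.
vague = Demand(
    lever="Stop the race",
    deadline=None,
    verifier="",
    scope="",
    consequence="",
)

# A specified ask. The start date is an arbitrary placeholder for illustration.
hypothetical_start = date(2025, 1, 1)
specific = Demand(
    lever="Pre-deployment third-party evals with redacted public summaries",
    deadline=hypothetical_start + timedelta(days=90),
    verifier="AISI, NIST, or a named university lab consortium",
    scope="Frontier releases above X compute or Y capability thresholds",
    consequence="No model launch press tour until eval summary is out",
)

print("vague ask is missing:", missing_parts(vague))
print("specific ask is missing:", missing_parts(specific))
```

If `missing_parts` returns anything at all, the ask is still a slogan.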

And maybe eat lunch.

What effective AI protest could look like

- Audit-ready demands. Tie protests to specific obligations already baked into the EU AI Act timeline. “Publish model cards and evaluation attestations consistent with EU expectations for GPAI before August 2025.” This maps to law. Law maps to behavior (digital-strategy.ec.europa.eu).

- Compute transparency. Push for compute threshold disclosures and incident reporting aligned with emerging codes of practice. If you chant, chant dates (IT Pro).

- Worker pressure inside labs. Talk to employees, not only cameras. Inside pressure moved real needles in the past month more than sidewalk theater did.

- Independent red-team funding. Raise money for third-party, rotating eval teams. Bring receipts.

- DeAI, not de-food. Build decentralized oversight tooling, reproducible eval harnesses, and public leaderboards for misuse risk. “Code or it didn’t happen,” as our cousins say.

You want to scare labs? Ship a reproducible exploit demo to AISI and Parliament the same morning. With a fix path. Then you are in the game. Not in ketosis.

The satire

Two men vs. GPUs.

The GPUs do not blink. They do not care about your electrolytes.

You are fighting an abstraction with your pancreas. From a strategy perspective, that is adorable.

If you truly fear a misaligned optimizer, do not become one. Optimize for outcomes, not suffering.

Try eating, then negotiating.

TL;DR

The post says “we are in an emergency, we are hunger striking, DeepMind should publicly promise to halt if others do.”

That is emotionally sincere and strategically bad. It misuses a tactic designed for states and prisons, alienates moderates, and ignores the very real levers already online in law and evaluation.

If you want to slow the race, stop starving and start specifying.

Sources used

- https://x.com/MichaelTrazzi/status/1964078661188886746 X

- https://forum.effectivealtruism.org/posts/kjWEMqJNhQeFwmwvu/hunger-strike-2-this-time-in-front-of-deepmind Effective Altruism Forum

- https://www.lesswrong.com/posts/RxcYnuiZZzp63Hjqr/hunger-strike-in-front-of-anthropic-by-one-guy-concerned LessWrong

- https://futurism.com/ai-hunger-strike-anthropic Futurism

- https://www.businessinsider.com/protesters-accuse-google-deepmind-breaking-promises-ai-safety-2025-6 Business Insider

- https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai digital-strategy.ec.europa.eu

- https://www.gov.uk/government/publications/ai-safety-institute-approach-to-evaluations GOV.UK

- https://www.nist.gov/publications/artificial-intelligence-risk-management-framework-ai-rmf-10 NIST

- https://www.aisi.gov.uk/work/pre-deployment-evaluation-of-openais-o1-model AI Security Institute

- https://www.tandfonline.com/doi/full/10.1080/09502386.2024.2405584 Taylor & Francis Online

- https://www.ncbi.nlm.nih.gov/books/NBK385300/ NCBI